Many of you have probably seen the special edition of Nature that is devoted to "The Future of the PhD". Much of the discussion centers on the career prospects of those with a PhD. As most of those in graduate school know, there are far more eager first-year graduate students than tenure-track positions at R1 universities - and to have a shot at the few positions available, one often has to slog through multiple low-paying post-docs after a median of 7 years in a PhD program. Nature's special feature includes an editorial entitled "Fix the PhD". But here's my question to those of us in grad school: in your experience, does the PhD system need fixing?

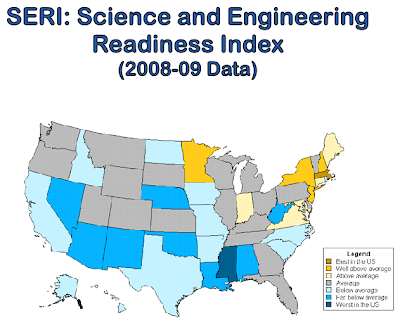

Before we jump into the debate, let me share a little bit of data. First, Nature has put together a nice set of graphs covering three key aspects of the PhD experience - namely the number of PhDs awarded by field, the median time to completion for the hard sciences, and the employment of science and engineering PhDs 1-3 years after graduation.

Several things stood out to me. First, the medical and life sciences saw a huge increase in PhD production, and many of the anecdotal horror stories I have heard come from those fields. Second, a median of 7 years in grad school seems high to me - using data from the past 15 years in my department, I have personally computed a mean time to completion of 6 years for my program. Finally, I was surprised not to see growth in the number of non-tenured faculty. Other sources have clearly indicated that the ranks of the non-tenured have been growing, but apparently not with new PhDs.

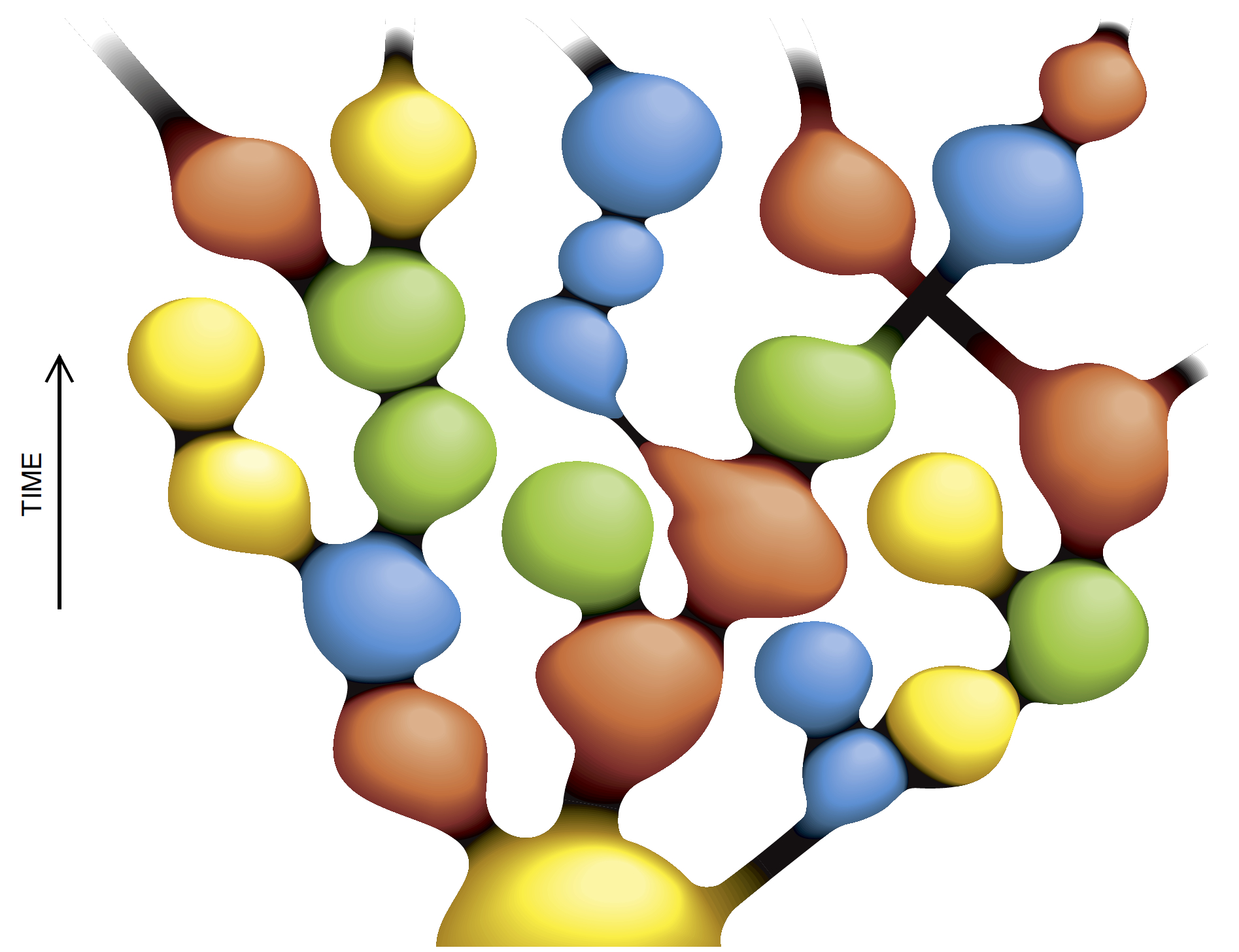

The second bit of data I would like to inject comes from my own department. CU's Astrophysical and Planetary Sciences department is pretty good, but I would say that CU is somewhat average when it comes to the top tier of the astrophysics world. So in the hope that CU's PhDs are in some sense "average", I decided to track all 43 of the PhD recipients from my department between 2000 and 2005 using Google and ADS in order to see where they are now. I sorted them into 7 categories (post-doc, tenure-track faculty at research institutions, tenure-track faculty at non-research institutions, non-tenure-track faculty, research staff, industry, or other). The results are on your left. Note that all of those who are still post-docs graduated in 2005. Interestingly, only 1 of the 43 PhDs is in a non-tenure-track faculty position, and a very large fraction (67.4%) are still publishing in peer-reviewed journals in astronomy, physics, or planetary science. As a side note, the "other" category has some great entries, including a fellow who works for Answers in Genesis, another who works for a foundation that advocates for manta rays in Hawaii, and another who does market research for Kaiser Permanente.

So, there's a bit of data - more is of course welcome. Now, what does it mean? Is the PhD system in the US broken, and if so, how does one fix it?